10 changed files with 24 additions and 24 deletions

BIN

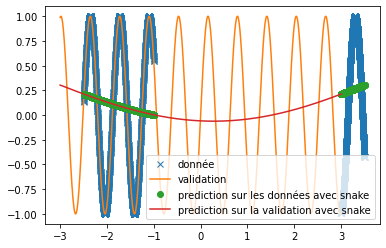

code/fonctions_activations_classiques/prediction_x2_snake.png

BIN

code/fonctions_activations_classiques/prediction_x2_snake_v2.png

BIN

code/fonctions_activations_classiques/prediction_x2_x+sin.png

+ 7

- 7

code/fonctions_activations_classiques/sin.py

| @@ -34,13 +34,13 @@ model_sin=tf.keras.models.Sequential() | |||

| model_sin.add(tf.keras.Input(shape=(1,))) | |||

| model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||

| model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||

| model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||

| model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||

| model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||

| model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||

| # model_sin.add(tf.keras.layers.Dense(8, activation=sin)) | |||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||

| model_sin.add(tf.keras.layers.Dense(512, activation=sin)) | |||

| model_sin.add(tf.keras.layers.Dense(1)) | |||

| opti=tf.keras.optimizers.Adam() | |||
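
`model_sin.summary()` reports how many trainable parameters each configuration of this stack has. The count can also be checked by hand: a `Dense` layer with `n_in` inputs and `n_out` units holds `n_in * n_out` weights plus `n_out` biases, and the `sin` activation adds none. The helper `dense_param_count` below is hypothetical (not part of the repository), and the two stacks are illustrative combinations of the layer sizes in this hunk, not a statement of which lines the commit adds or removes.

```python
def dense_param_count(widths):
    """Trainable parameters of a stack of Dense layers (biases included).

    widths[0] is the input dimension; every following entry is the number
    of units of one Dense layer, the last one being the output layer.
    """
    total = 0
    for n_in, n_out in zip(widths[:-1], widths[1:]):
        total += n_in * n_out + n_out  # weight matrix + bias vector
    return total


# Illustrative (hypothetical) stacks: input shape (1,) and a Dense(1) output.
six_dense_64 = [1] + [64] * 6 + [1]   # six Dense(64) hidden layers
dense_64_512 = [1, 64, 512, 1]        # Dense(64) followed by Dense(512)

print(dense_param_count(six_dense_64))  # 20993
print(dense_param_count(dense_64_512))  # 33921
```

A single 512-unit layer placed after a 64-unit layer is more expensive than six 64-unit layers: the 64 to 512 connection alone holds 33,280 parameters.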

+ 3

- 2

code/fonctions_activations_classiques/snake.py

| @@ -17,10 +17,10 @@ from Creation_donnee import * | |||

| import numpy as np | |||

| w=10 | |||

| n=20000 | |||

| n=2000 | |||

| # dataset creation | |||

| X,Y=creation_sin(-2.5,-1,n,w) | |||

| X2,Y2=creation_sin(1,1.5,n,w) | |||

| X2,Y2=creation_sin(3,3.5,n,w) | |||

| X=np.concatenate([X,X2]) | |||

| Y=np.concatenate([Y,Y2]) | |||

| @@ -30,6 +30,7 @@ Xv,Yv=creation_sin(-3,3,n,w) | |||

| model_sin=tf.keras.models.Sequential() | |||

| model_sin.add(tf.keras.Input(shape=(1,))) | |||
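
`snake.py` uses the Snake activation, whose definition lives outside this diff (presumably in `fonction_activation`). Assuming it follows the form usually given for Snake (Ziyin et al., 2020), snake_a(x) = x + sin²(a·x)/a with a frequency parameter a > 0, a NumPy sketch looks like this; the function name `snake` and the default `a=1.0` are assumptions, not the repository's code.

```python
import numpy as np


def snake(x, a=1.0):
    """Snake activation x + sin(a*x)**2 / a (assumed form; a > 0)."""
    x = np.asarray(x, dtype=float)
    return x + np.sin(a * x) ** 2 / a


xs = np.linspace(-3.0, 3.0, 7)
ys = snake(xs, a=2.0)
# sin(.)**2 lies in [0, 1], so the output stays between x and x + 1/a:
# the function follows the identity and adds a bounded periodic ripple.
print(np.all((ys >= xs) & (ys <= xs + 0.5)))  # True
```

The identity term keeps the activation monotone in trend while the bounded periodic term lets a network represent oscillations, which is why Snake is proposed for extrapolating periodic targets such as sine waves.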

BIN

code/fonctions_activations_classiques/snake_donnée_augenté.png

+ 4

- 5

code/fonctions_activations_classiques/swish.py

| @@ -15,16 +15,15 @@ from Creation_donnee import * | |||

| import numpy as np | |||

| w=10 | |||

| n=2000 | |||

| n=20000 | |||

| # dataset creation | |||

| X,Y=creation_sin(-1.5,-1,n,w) | |||

| X2,Y2=creation_sin(1,1.5,n,w) | |||

| X,Y=creation_x2(-2.5,-1,n) | |||

| X2,Y2=creation_x2(1,1.5,n) | |||

| X=np.concatenate([X,X2]) | |||

| Y=np.concatenate([Y,Y2]) | |||

| n=10000 | |||

| Xv,Yv=creation_sin(-3,3,n,w) | |||

| Xv,Yv=creation_x2(-3,3,n) | |||
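
`swish.py` trains with the Swish activation, swish(x) = x·σ(βx), where σ is the logistic sigmoid; with β = 1 it is also known as SiLU. Whether the repository defines it itself or relies on Keras's built-in `tf.keras.activations.swish` is not visible in this diff, so the NumPy version below is only a reference sketch; the `beta` parameter is an assumption.

```python
import numpy as np


def swish(x, beta=1.0):
    """Swish activation x * sigmoid(beta * x); beta=1 gives SiLU."""
    x = np.asarray(x, dtype=float)
    # 0.5 * (1 + tanh(z / 2)) equals 1 / (1 + exp(-z)) and never overflows.
    sig = 0.5 * (1.0 + np.tanh(0.5 * beta * x))
    return x * sig


xs = np.array([-10.0, -1.0, 0.0, 1.0, 10.0])
print(np.round(swish(xs), 4))
```

For large positive inputs swish approaches the identity and for large negative inputs it approaches 0, so far from the data a swish network behaves much like a ReLU network.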

BIN

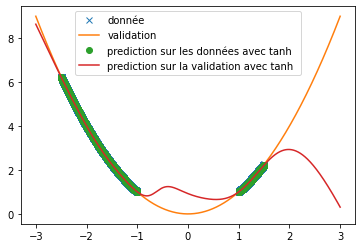

code/fonctions_activations_classiques/tanh.png

+ 5

- 5

code/fonctions_activations_classiques/tanh_vs_ReLU.py

| @@ -14,15 +14,15 @@ from fonction_activation import * | |||

| from Creation_donnee import * | |||

| import numpy as np | |||

| w=10 | |||

| n=2000 | |||

| n=20000 | |||

| # dataset creation | |||

| X,Y=creation_sin(-1.5,-1,n,w) | |||

| X2,Y2=creation_sin(1,1.5,n,w) | |||

| X,Y=creation_x2(-2.5,-1,n) | |||

| X2,Y2=creation_x2(1,1.5,n) | |||

| X=np.concatenate([X,X2]) | |||

| Y=np.concatenate([Y,Y2]) | |||

| n=10000 | |||

| Xv,Yv=creation_sin(-3,3,n,w) | |||

| Xv,Yv=creation_x2(-3,3,n) | |||
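
The `creation_x2` calls in this hunk build the training set from two disjoint intervals, [-2.5, -1] and [1, 1.5], while the validation set covers all of [-3, 3]; most validation points therefore lie outside the training data, and the validation loss mainly measures extrapolation. `Creation_donnee` is not part of this diff, so the sketch uses a hypothetical stand-in, `make_x2_data`, which assumes evenly spaced inputs and targets y = x²; the sample counts are taken from the `n=` values in the hunk.

```python
import numpy as np


def make_x2_data(a, b, n):
    """Hypothetical stand-in for creation_x2: n evenly spaced x in [a, b], y = x**2."""
    x = np.linspace(a, b, n).reshape(-1, 1)  # shape (n, 1), matching Input(shape=(1,))
    return x, x ** 2


n = 20000
X1, Y1 = make_x2_data(-2.5, -1.0, n)
X2, Y2 = make_x2_data(1.0, 1.5, n)
X = np.concatenate([X1, X2])  # training set: two disjoint intervals
Y = np.concatenate([Y1, Y2])

Xv, Yv = make_x2_data(-3.0, 3.0, 10000)  # validation set: the full range

# Share of validation inputs that fall inside one of the training intervals.
inside = ((Xv >= -2.5) & (Xv <= -1.0)) | ((Xv >= 1.0) & (Xv <= 1.5))
print(X.shape, Xv.shape)  # (40000, 1) (10000, 1)
print(f"{inside.mean():.1%} of validation points are covered")
```

The two intervals have a combined length of 2 out of the 6 units spanned by [-3, 3], so roughly one third of the validation points are covered and the other two thirds test extrapolation.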

| @@ -46,7 +46,7 @@ model_ReLU.summary() | |||

| model_ReLU.fit(X, Y, batch_size=16, epochs=50, shuffle='True',validation_data=(Xv, Yv)) | |||

| model_ReLU.fit(X, Y, batch_size=16, epochs=5, shuffle='True',validation_data=(Xv, Yv)) | |||
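
This hunk only changes the number of epochs of `model_ReLU.fit`. As its name suggests, `tanh_vs_ReLU.py` compares a tanh network with a ReLU network on a validation range wider than the training intervals. A general property of the two activations explains much of what happens outside the data: a ReLU network is piecewise linear, so beyond its last kink it continues as a straight line, whereas every tanh unit saturates at ±1, so a tanh network flattens to a constant. The toy one-hidden-layer network below has random, untrained weights (it is not the repository's model) and only illustrates this asymptotic behaviour.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy network 1 -> 64 -> 1 with random weights (no training).
# |w| >= 0.5 and |b| <= 1 keep every hidden unit's kink/transition within |x| <= 2.
W1 = rng.uniform(0.5, 2.0, size=(1, 64)) * rng.choice([-1.0, 1.0], size=(1, 64))
b1 = rng.uniform(-1.0, 1.0, size=64)
W2 = rng.normal(size=(64, 1))
b2 = rng.normal(size=1)


def mlp(x, act):
    """Forward pass of the toy network for a 1-D array of inputs."""
    h = act(np.asarray(x, dtype=float).reshape(-1, 1) @ W1 + b1)
    return (h @ W2 + b2).ravel()


def relu(z):
    return np.maximum(z, 0.0)


far = np.array([50.0, 100.0, 150.0])  # far outside [-3, 3]
y_relu = mlp(far, relu)
y_tanh = mlp(far, np.tanh)

print(np.diff(y_relu))  # two equal steps: the ReLU network is affine out here
print(np.diff(y_tanh))  # zero steps: every tanh unit has saturated
```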

+ 5

- 5

code/fonctions_activations_classiques/x_sin.py

| @@ -24,15 +24,15 @@ from Creation_donnee import * | |||

| import numpy as np | |||

| w=10 | |||

| n=20 | |||

| n=20000 | |||

| # dataset creation | |||

| X,Y=creation_sin(-1.5,-1,n,w) | |||

| X2,Y2=creation_sin(1,1.5,n,w) | |||

| X,Y=creation_x2(-2.5,-1,n) | |||

| X2,Y2=creation_x2(1,1.5,n) | |||

| X=np.concatenate([X,X2]) | |||

| Y=np.concatenate([Y,Y2]) | |||

| n=10000 | |||

| Xv,Yv=creation_sin(-3,3,n,w) | |||

| Xv,Yv=creation_x2(-3,3,n) | |||

| @@ -55,7 +55,7 @@ model_xsin.compile(opti, loss='mse', metrics=['accuracy']) | |||

| model_xsin.summary() | |||

| model_xsin.fit(X, Y, batch_size=1, epochs=10, shuffle='True',validation_data=(Xv, Yv)) | |||

| model_xsin.fit(X, Y, batch_size=16, epochs=100, shuffle='True',validation_data=(Xv, Yv)) | |||
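
The `fit` calls in this hunk differ in `batch_size` and `epochs`, and the first hunk of `x_sin.py` also touches the sample count `n`. When `fit` iterates over in-memory arrays without an explicit `steps_per_epoch`, Keras runs ceil(N / batch_size) gradient updates per epoch (the last batch may be partial), so the total training work is that number times `epochs`. Since `X` concatenates two intervals of `n` points each, N = 2n. The hypothetical helper below tabulates the total number of updates for the values appearing in the diff, without implying which pairing is the old or the new version.

```python
import math
from itertools import product


def total_updates(n_samples, batch_size, epochs):
    """Gradient updates performed by fit() on arrays: ceil(N / batch) per epoch."""
    return math.ceil(n_samples / batch_size) * epochs


# Values of n, batch_size and epochs appearing in the x_sin.py diff.
for n, (batch_size, epochs) in product((20, 20000), ((1, 10), (16, 100))):
    N = 2 * n  # X = concatenation of two intervals of n samples each
    print(f"n={n:>5}  batch_size={batch_size:>2}  epochs={epochs:>3}  "
          f"updates={total_updates(N, batch_size, epochs)}")
```

With N = 40000, `batch_size=1` for 10 epochs performs 400,000 updates, while `batch_size=16` for 100 epochs performs 250,000 larger-batch updates.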
