二進制

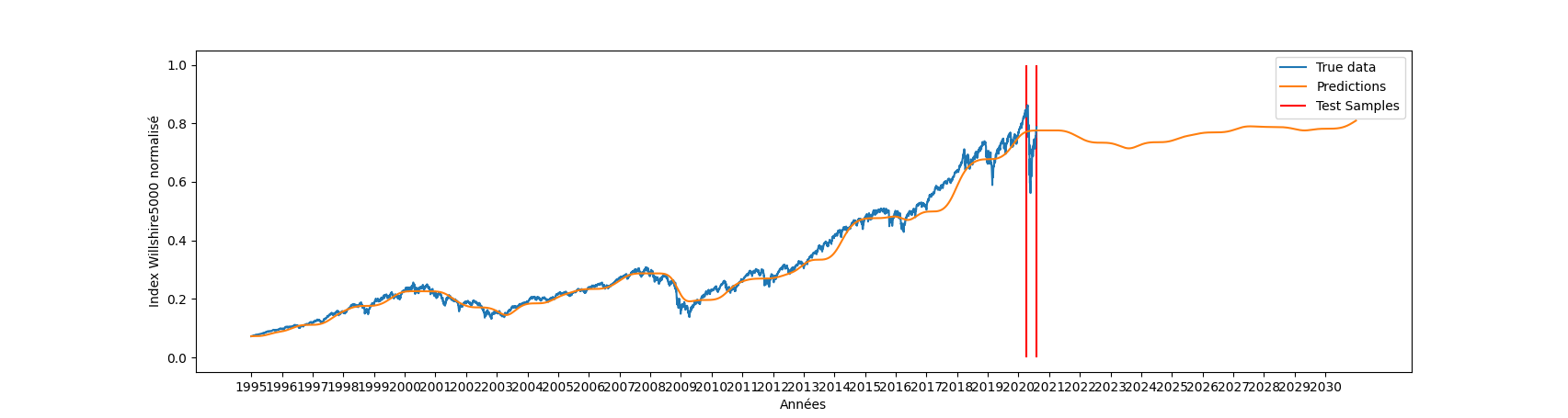

code/wilshire_5000/30aplot1.png

查看文件

二進制

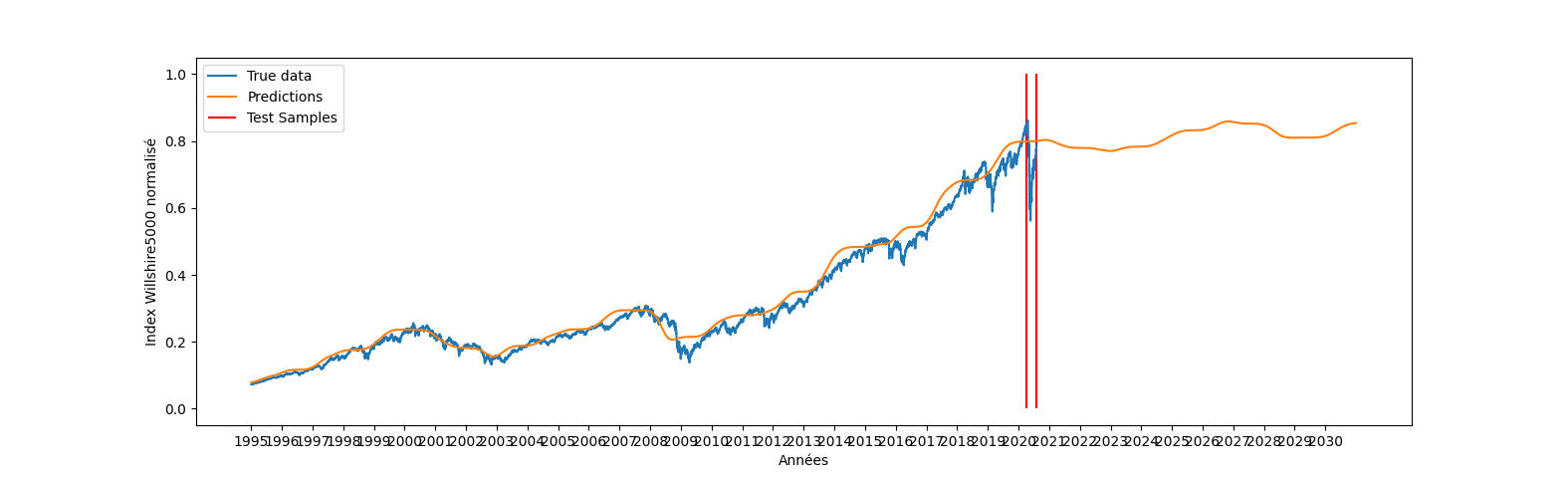

code/wilshire_5000/30aplot2.png

查看文件

+ 4

- 0

code/wilshire_5000/README.md

查看文件

| @@ -1,5 +1,9 @@ | |||

| # wilshire_5000 | |||

| Classical Installations Required : numpy, pandas, tensorflow and matplotlib (and warnings) | |||

| Other Installation : statsmodels for ARIMA predictions (pip install statsmodels) | |||

| ## Données | |||

| [Données](https://fred.stlouisfed.org/series/WILL5000INDFC) | |||

+ 7

- 0

code/wilshire_5000/Snake100a/keras_metadata.pb

文件差異過大導致無法顯示

查看文件

二進制

code/wilshire_5000/Snake100a/saved_model.pb

查看文件

二進制

code/wilshire_5000/Snake100a/variables/variables.data-00000-of-00001

查看文件

二進制

code/wilshire_5000/Snake100a/variables/variables.index

查看文件

+ 7

- 0

code/wilshire_5000/Snake10a/keras_metadata.pb

文件差異過大導致無法顯示

查看文件

二進制

code/wilshire_5000/Snake10a/saved_model.pb

查看文件

二進制

code/wilshire_5000/Snake10a/variables/variables.data-00000-of-00001

查看文件

二進制

code/wilshire_5000/Snake10a/variables/variables.index

查看文件

+ 7

- 0

code/wilshire_5000/Snake1a/keras_metadata.pb

文件差異過大導致無法顯示

查看文件

二進制

code/wilshire_5000/Snake1a/saved_model.pb

查看文件

二進制

code/wilshire_5000/Snake1a/variables/variables.data-00000-of-00001

查看文件

二進制

code/wilshire_5000/Snake1a/variables/variables.index

查看文件

+ 7

- 0

code/wilshire_5000/Snake20a/keras_metadata.pb

文件差異過大導致無法顯示

查看文件

二進制

code/wilshire_5000/Snake20a/saved_model.pb

查看文件

二進制

code/wilshire_5000/Snake20a/variables/variables.data-00000-of-00001

查看文件

二進制

code/wilshire_5000/Snake20a/variables/variables.index

查看文件

+ 7

- 0

code/wilshire_5000/Snake30a/keras_metadata.pb

文件差異過大導致無法顯示

查看文件

二進制

code/wilshire_5000/Snake30a/saved_model.pb

查看文件

二進制

code/wilshire_5000/Snake30a/variables/variables.data-00000-of-00001

查看文件

二進制

code/wilshire_5000/Snake30a/variables/variables.index

查看文件

+ 7

- 0

code/wilshire_5000/Snake50a/keras_metadata.pb

文件差異過大導致無法顯示

查看文件

二進制

code/wilshire_5000/Snake50a/saved_model.pb

查看文件

二進制

code/wilshire_5000/Snake50a/variables/variables.data-00000-of-00001

查看文件

二進制

code/wilshire_5000/Snake50a/variables/variables.index

查看文件

+ 1305

- 1

code/wilshire_5000/WILL5000INDFC.csv

文件差異過大導致無法顯示

查看文件

二進制

code/wilshire_5000/a_plot.png

查看文件

二進制

code/wilshire_5000/dataset.npy

查看文件

+ 0

- 11

code/wilshire_5000/grab_data.py

查看文件

| @@ -1,11 +0,0 @@ | |||

| import pandas | |||

| import numpy as np | |||

| csv = pandas.read_csv("WILL5000INDFC.csv") | |||

| extraction = csv["WILL5000INDFC"].tolist() | |||

| for i in range(len(extraction)) : | |||

| if extraction[i] == "." : | |||

| extraction[i] = extraction[i-1] | |||

| extraction = np.array(extraction).astype(float) | |||

| print(extraction) | |||

| np.save("dataset.npy", extraction) | |||

+ 183

- 0

code/wilshire_5000/nn.py

查看文件

| @@ -0,0 +1,183 @@ | |||

| import numpy as np | |||

| import tensorflow as tf | |||

| import pandas as pd | |||

| import matplotlib.pyplot as plt | |||

| import wilshire | |||

| from statsmodels.tsa.arima.model import ARIMA | |||

| ### Fonctions d'activations ### | |||

| def snake(x): | |||

| return(x+(tf.math.sin(30*x)**2)/30) | |||

| def sinus(x): | |||

| return(tf.math.sin(x)) | |||

| def sinus_cosinus(x): | |||

| return(tf.math.sin(x)+tf.math.cos(x)) | |||

| def swish(x): | |||

| return(x*tf.math.sigmoid(x)) | |||

| def prepare_data(filename="WILL5000INDFC.csv"): | |||

| """ | |||

| Prepare data by preprocessing, normalizing and cutting it in train and test sets | |||

| Return x and y train and test sets, as well as the maximum for later plots and the index separating both sets | |||

| """ | |||

| df_train,df_test,index = wilshire.preprocess(filename) | |||

| x_train = np.arange(df_train.shape[0]) | |||

| maximum = np.max(x_train) | |||

| x_train = x_train / maximum | |||

| y_train=df_train["WILL5000INDFC"] | |||

| y_train.to_numpy() | |||

| x_test = np.arange(df_train.shape[0]+1,df_train.shape[0]+df_test.shape[0]+1) | |||

| y_test = df_test["WILL5000INDFC"] | |||

| y_test.to_numpy() | |||

| x_test=x_test / maximum | |||

| return x_train,x_test,y_train,y_test,maximum,index | |||

| def arima_pred(y_train,y_test,orders=[[2,1,1],[2,2,1],[3,1,1],[2,1,2]],n=5): | |||

| """ | |||

| Computes the ARIMA errors (mse) for several orders to compare with the article | |||

| """ | |||

| mse=[] | |||

| for order in orders : | |||

| mean_err= [] | |||

| for k in range(n): | |||

| train = y_train | |||

| preds = [] | |||

| for test in range(len(y_test)): | |||

| model = ARIMA(train, order=(order[0],order[1],order[2])) | |||

| model = model.fit() | |||

| output = model.forecast() | |||

| #print(output) | |||

| preds.append(output) | |||

| #train.append(y_test[te | |||

| mean_err.append((np.square(np.array(preds) - np.array(y_test))).mean()) | |||

| mse.append([np.array(mean_err).mean(),np.array(mean_err).std()]) | |||

| return(mse) | |||

| def create_model(activation): | |||

| """ | |||

| Create the neural network with the requested activation function | |||

| """ | |||

| model = tf.keras.Sequential() | |||

| model.add(tf.keras.layers.Dense(1,input_shape=[1,],activation=activation)) | |||

| model.add(tf.keras.layers.Dense(64,activation=activation)) | |||

| model.add(tf.keras.layers.Dense(64,activation=activation)) | |||

| model.add(tf.keras.layers.Dense(1)) | |||

| opt = tf.keras.optimizers.SGD(learning_rate=0.01,momentum=0.8) | |||

| model.compile(optimizer=opt, loss='mse') | |||

| model.build() | |||

| model.summary() | |||

| return model | |||

| def training_testing(n=5,activations = [tf.keras.activations.relu,swish,sinus_cosinus,sinus,snake],epochs = 50): | |||

| """ | |||

| Trains models and computes means and std of test errors on n tries for each activation function requested. | |||

| """ | |||

| x_train,x_test,y_train,y_test,maximum,index = prepare_data(filename="WILL5000INDFC.csv") | |||

| models = [] | |||

| errors_train,errors_test = [],[] | |||

| mean_y_train,mean_y_test,std_y_test=[],[],[] | |||

| for activation in activations : | |||

| y_train_5=[] | |||

| y_test_5=[] | |||

| errors_train_5=[] | |||

| errors_test_5=[] | |||

| for k in range(n): | |||

| model = create_model(activation) | |||

| model.fit(x_train,y_train, batch_size=1, epochs=epochs) | |||

| y_pred_test = model.predict(x_test) | |||

| y_pred_train = model.predict(x_train) | |||

| y_train_5.append(y_pred_train) | |||

| y_test_5.append(y_pred_test) | |||

| errors_test_5.append(model.evaluate(x_test,y_test)) | |||

| errors_train_5.append(model.evaluate(x_train,y_train)) | |||

| models.append(model) | |||

| mean_y_train.append(np.mean(y_train_5,axis=0)) | |||

| mean_y_test.append(np.mean(y_test_5,axis=0)) | |||

| std_y_test.append(np.std(y_test_5,axis=0)) | |||

| errors_train.append([np.mean(errors_train_5),np.std(errors_train_5)]) | |||

| errors_test.append([np.mean(errors_test_5),np.std(errors_test_5)]) | |||

| # y_preds_train.append(y_pred_train) | |||

| # y_preds_test.append(y_pred_test) | |||

| return models,errors_train,errors_test | |||

| def final_plot(models,errors_test,arima_err,activations=["ReLU","Swish","Sinus Cosinus","Sinus","Snake"],orders_ARIMA = ["[2,1,1]","[2,2,1]","[3,1,1]","[2,1,2]"]): | |||

| """ | |||

| Prints the results to compare with the table of the article and plot the same plot as the article | |||

| """ | |||

| x_train,x_test,y_train,y_test,maximum,index = prepare_data(filename="WILL5000INDFC.csv") | |||

| x = np.arange(9000) ## 9000 data points bring us to ~2031 to try and predict future data | |||

| x_n = x / maximum | |||

| future_preds = models[-1].predict(x_n) | |||

| y_true = np.concatenate((y_train,y_test)) | |||

| x_cut = np.arange(x_train.shape[0]+x_test.shape[0]) | |||

| print("----- ARIMA Test MSE -----") | |||

| for k in range(len(orders_ARIMA)): | |||

| print("ARIMA"+orders_ARIMA[k]+" : "+str(arima_err[k])) | |||

| print("----- DNN Test MSE -----") | |||

| for k in range(len(activations)): | |||

| print("DNN "+activations[k]+" : "+str(errors_test[k])) | |||

| ### PLOT ### | |||

| plt.figure() | |||

| plt.plot(x_cut,y_true,label="True data") | |||

| plt.plot(x,future_preds,label="Predictions") | |||

| plt.xticks(range(0, 9000, 500), range(1995, 2031, 2)) | |||

| plt.xlabel("Années") | |||

| plt.ylabel("Index Willshire5000 normalisé") | |||

| plt.vlines([index,index+85],ymin=0,ymax=1,colors="r",label="Test Samples") | |||

| plt.legend() | |||

| plt.show() | |||

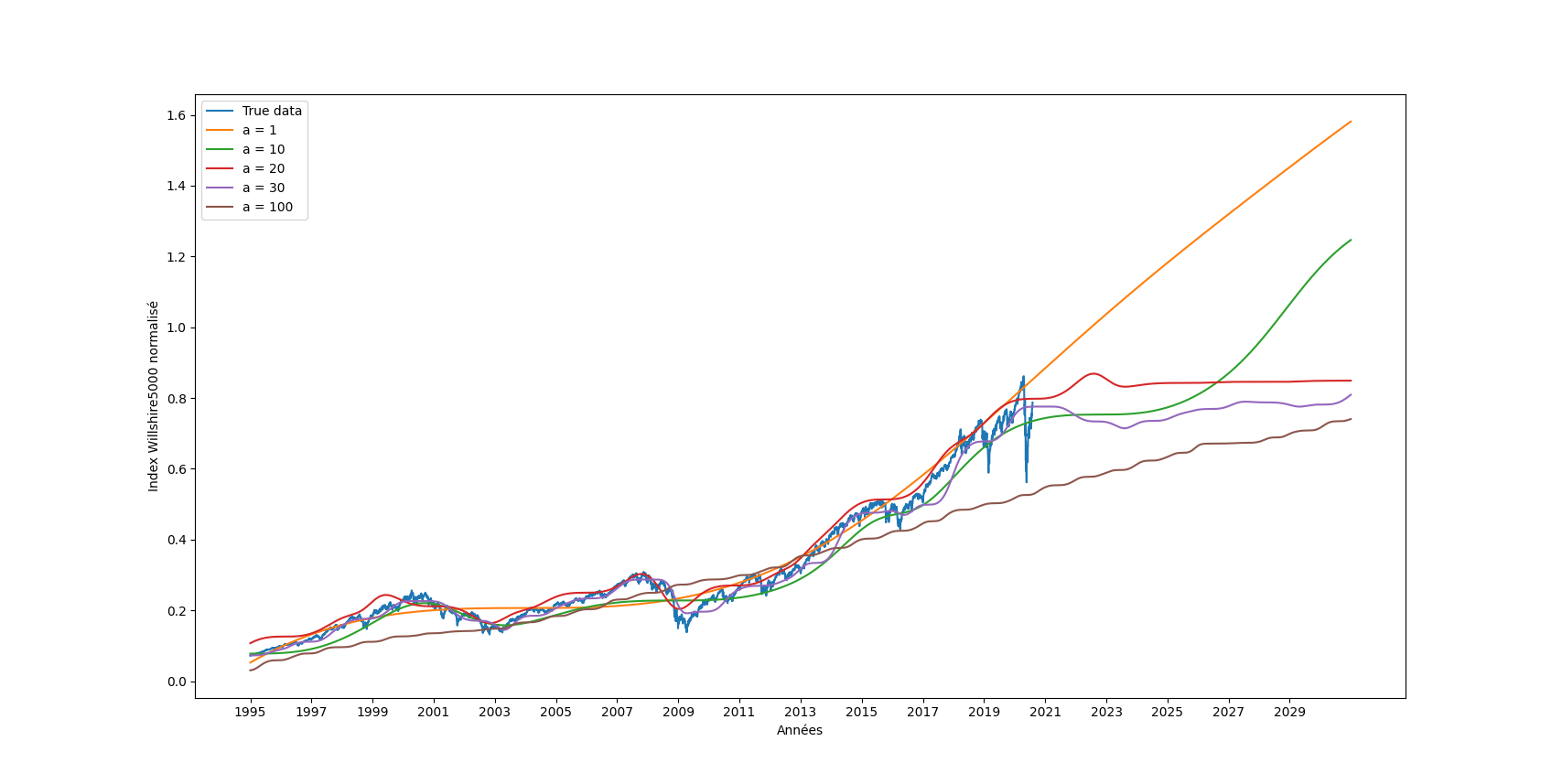

| def plot_all_a(a=["1","10","20","30","100"]): | |||

| """ | |||

| Plots the varying a values plot by loading pre-existing models (they are uploaded on GitHub) | |||

| """ | |||

| models=[] | |||

| for param in a : | |||

| models.append(tf.keras.models.load_model("Snake"+param+"a")) | |||

| x_train,x_test,y_train,y_test,maximum,index = prepare_data(filename="WILL5000INDFC.csv") | |||

| x = np.arange(9000) | |||

| x_n = x / maximum | |||

| y_true = np.concatenate((y_train,y_test)) | |||

| x_cut = np.arange(x_train.shape[0]+x_test.shape[0]) | |||

| future_preds=[] | |||

| for k in range(len(models)): | |||

| future_preds.append(models[k].predict(x_n) ) | |||

| plt.figure() | |||

| plt.plot(x_cut,y_true,label="True data") | |||

| for k in range(len(models)): | |||

| plt.plot(x,future_preds[k],label="a = "+a[k]) | |||

| plt.xticks(range(0, 9000, 500), range(1995, 2031, 2)) | |||

| plt.xlabel("Années") | |||

| plt.ylabel("Index Willshire5000 normalisé") | |||

| plt.legend() | |||

| plt.show() | |||

| # plot_all_a() | |||

+ 177

- 0

code/wilshire_5000/notebook_wilshire.ipynb

文件差異過大導致無法顯示

查看文件

+ 24

- 0

code/wilshire_5000/wilshire.py

查看文件

| @@ -0,0 +1,24 @@ | |||

| import numpy as np | |||

| import tensorflow as tf | |||

| import pandas as pd | |||

| import matplotlib.pyplot as plt | |||

| def parser(path): | |||

| df = pd.read_csv(path,na_values='.') | |||

| #df = df.interpolate() ### Interpolate or dropna for bank holidays | |||

| df = df.dropna().reset_index(drop=True) | |||

| return(df) | |||

| def preprocess(path): | |||

| df = parser(path) | |||

| df_normalized = df[:] | |||

| df_normalized["WILL5000INDFC"]=df_normalized["WILL5000INDFC"]/np.max(df_normalized["WILL5000INDFC"]) | |||

| index_train = int(df_normalized[df_normalized["DATE"]=="2020-01-31"].index.array[0]) | |||

| df_train = df_normalized[:index_train] | |||

| df_test = df_normalized[index_train+1:index_train+85] #Between 02-01 and 05-31 | |||

| return(df_train,df_test,index_train) | |||

Loading…