27 changed files with 217 additions and 88 deletions

+ 58

- 0

code/fonctions_activations_classiques/Creation_donnee.py


| import math as ma | import math as ma | ||||

| len_seq = 10 | len_seq = 10 | ||||

| def creation_sin_RNN(len_seq,tmin,tmax,n,w,a=1,b=0): | def creation_sin_RNN(len_seq,tmin,tmax,n,w,a=1,b=0): | ||||

| Datax, Datay = [], [] | Datax, Datay = [], [] | ||||

| def creation_sin(tmin,tmax,n,w,a=1,c=0): | def creation_sin(tmin,tmax,n,w,a=1,c=0): | ||||

| ## [tmin,tmax] interval over which the points are generated | |||||

| ## n number of points | |||||

| ## w angular frequency | |||||

| ## Lx = a*sin + c, y-coordinates of the points | |||||

| ## t x-coordinates of the points | |||||

| Lx=[] | Lx=[] | ||||

| t = np.linspace(tmin,tmax,n) | t = np.linspace(tmin,tmax,n) | ||||

| for i in t: | for i in t: | ||||

| return(t,Lx) | return(t,Lx) | ||||

| def creation_x_sin(tmin,tmax,n,w,a=1,b=0,c=0): | def creation_x_sin(tmin,tmax,n,w,a=1,b=0,c=0): | ||||

| ## [tmin,tmax] interval over which the points are generated | |||||

| ## n number of points | |||||

| ## w angular frequency | |||||

| ## Lx = a*x + b*sin(x) + c, y-coordinates of the points | |||||

| ## t x-coordinates of the points | |||||

| Lx=[] | Lx=[] | ||||

| t = np.linspace(tmin,tmax,n) | t = np.linspace(tmin,tmax,n) | ||||

| for i in t: | for i in t: | ||||

| return(t,Lx) | return(t,Lx) | ||||

| def creation_x_sin2(tmin,tmax,n,w,a=1,b=1,c=0): | def creation_x_sin2(tmin,tmax,n,w,a=1,b=1,c=0): | ||||

| ## n number of points | |||||

| ## w angular frequency | |||||

| ## Lx = a*x + b*sin(x)² + c, y-coordinates of the points | |||||

| ## t x-coordinates of the points | |||||

| Lx=[] | Lx=[] | ||||

| t = np.linspace(tmin,tmax,n) | t = np.linspace(tmin,tmax,n) | ||||

| for i in t: | for i in t: | ||||

| Lx=np.array(Lx) | Lx=np.array(Lx) | ||||

| return(t,Lx) | return(t,Lx) | ||||

| def creation_x(tmin,tmax,n): | |||||

| ## n number of points | |||||

| ## w angular frequency | |||||

| ## Lx = x, y-coordinates of the points | |||||

| ## t x-coordinates of the points | |||||

| Lx=[] | |||||

| t= np.linspace(tmin,tmax,n) | |||||

| for i in t: | |||||

| Lx.append(i) | |||||

| return(t,np.array(Lx)) | |||||

| def creation_arctan(tmin,tmax,n): | |||||

| ## n number of points | |||||

| ## w angular frequency | |||||

| ## Lx = arctan(x), y-coordinates of the points | |||||

| ## t x-coordinates of the points | |||||

| Lx=[] | |||||

| t= np.linspace(tmin,tmax,n) | |||||

| for i in t: | |||||

| Lx.append(np.arctan(i)) | |||||

| return(t,np.array(Lx)) | |||||

| def creation_x2(tmin,tmax,n): | |||||

| ## n number of points | |||||

| ## w angular frequency | |||||

| ## Lx = x², y-coordinates of the points | |||||

| ## t x-coordinates of the points | |||||

| Lx=[] | |||||

| t= np.linspace(tmin,tmax,n) | |||||

| for i in t: | |||||

| Lx.append(i**2) | |||||

| return(t,np.array(Lx)) | |||||
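
The generators in `Creation_donnee.py` all follow the same pattern: sample `n` points with `np.linspace`, then append one value per point in a Python loop. As a minimal sketch, the same arrays could be produced without the loop. The `_vec` names are hypothetical and not part of the commit, and the formula used for the sine generator, `a*sin(w*t) + c`, is an assumption because the diff does not show the loop body of `creation_sin`.

```python
import numpy as np


def creation_sin_vec(tmin, tmax, n, w, a=1, c=0):
    # Assumed formula: a*sin(w*t) + c (the loop body is elided in the diff).
    t = np.linspace(tmin, tmax, n)
    return t, a * np.sin(w * t) + c


def creation_x2_vec(tmin, tmax, n):
    # Same output as creation_x2: y = t**2 at n evenly spaced points.
    t = np.linspace(tmin, tmax, n)
    return t, t ** 2


def creation_arctan_vec(tmin, tmax, n):
    # Same output as creation_arctan: y = arctan(t).
    t = np.linspace(tmin, tmax, n)
    return t, np.arctan(t)
```

Each function returns `(t, Lx)` as NumPy arrays, so the `np.concatenate` calls in the training scripts would work on the result unchanged.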

+ 3

- 3

code/fonctions_activations_classiques/fonction_activation.py


| def snake(x, alpha=1.0): | |||||

| def snake(x, alpha=5): | |||||

| return (x + tf.sin(x)**2/alpha) | return (x + tf.sin(x)**2/alpha) | ||||

| def x_sin(x,alpha=1.0): | |||||

| def x_sin(x,alpha=5): | |||||

| return (x + tf.sin(x)/alpha) | return (x + tf.sin(x)/alpha) | ||||

| def sin(x,alpha=1.0): | |||||

| def sin(x,alpha=5): | |||||

| return(tf.sin(x)/alpha) | return(tf.sin(x)/alpha) | ||||
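
The change to `fonction_activation.py` only touches the default `alpha`, which goes from `1.0` to `5`. To illustrate the effect, here is a rough sketch of the three activations on plain floats, using `math` instead of TensorFlow. The `_py` names are hypothetical; the formulas are copied from the diff. With `alpha=5` the periodic term is at most 1/5 in magnitude, so `snake` and `x_sin` stay closer to the identity.

```python
import math


def snake_py(x, alpha=5):
    # Same formula as snake() in the diff: x + sin(x)^2 / alpha
    return x + math.sin(x) ** 2 / alpha


def x_sin_py(x, alpha=5):
    # Same formula as x_sin() in the diff: x + sin(x) / alpha
    return x + math.sin(x) / alpha


def sin_py(x, alpha=5):
    # Same formula as sin() in the diff: sin(x) / alpha
    return math.sin(x) / alpha


x = math.pi / 2                 # sin(x) = 1, where the periodic term is largest
old = snake_py(x, alpha=1.0)    # previous default: x + 1
new = snake_py(x)               # new default alpha=5: x + 0.2
```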

+ 19

- 0

code/fonctions_activations_classiques/notebook.py


| # -*- coding: utf-8 -*- | |||||

| """ | |||||

| Created on Mon Jan 3 11:48:21 2022 | |||||

| @author: virgi | |||||

| """ | |||||

| ## Runs the scripts that perform training with different activation functions. | |||||

| ## The training datasets can be changed in each file, as can | |||||

| ## the architecture of the networks used | |||||

| exec(open('x_sin.py').read()) | |||||

| exec(open('sin.py').read()) | |||||

| exec(open('snake.py').read()) | |||||

| exec(open('swish.py').read()) | |||||

| exec(open('tanh_vs_ReLU.py').read()) | |||||
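
`notebook.py` runs each experiment by reading the script and passing its text to `exec`, so every script executes in the notebook's own namespace. One possible alternative, sketched here and not part of the commit, is `runpy.run_path` from the standard library, which runs each file in a fresh namespace and returns its globals. The helper name `run_scripts` is hypothetical; the file names come from the diff, with the `.py` extension assumed for `tanh_vs_ReLU`.

```python
import runpy
from pathlib import Path

# Script names taken from notebook.py; ".py" is assumed for tanh_vs_ReLU.
SCRIPTS = ["x_sin.py", "sin.py", "snake.py", "swish.py", "tanh_vs_ReLU.py"]


def run_scripts(paths):
    """Run each script in its own namespace and return {path: globals}."""
    results = {}
    for path in paths:
        if not Path(path).is_file():
            # Report and skip a missing file instead of failing on open().
            print(f"skipping missing script: {path}")
            continue
        results[path] = runpy.run_path(path, run_name="__main__")
    return results
```

Calling `run_scripts(SCRIPTS)` from the directory that contains the scripts would run them in order, and variables such as `model_sin` defined in one script would no longer overwrite those of another.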

BIN

code/fonctions_activations_classiques/prediction_sinus.png


BIN

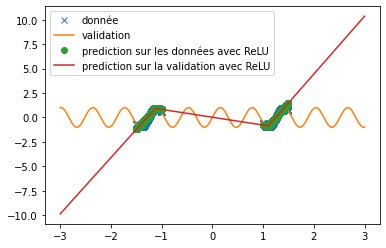

code/fonctions_activations_classiques/prediction_sinus_ReLU.png


BIN

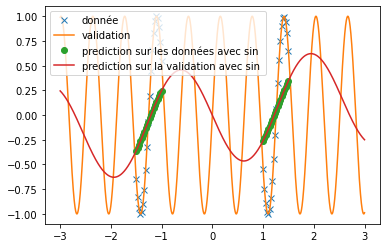

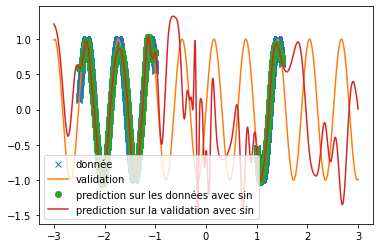

code/fonctions_activations_classiques/prediction_sinus_sin.png


BIN

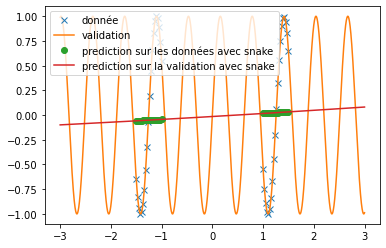

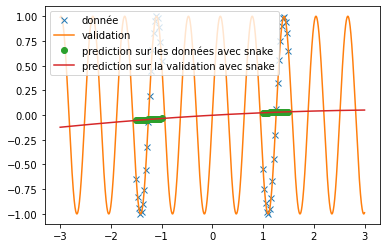

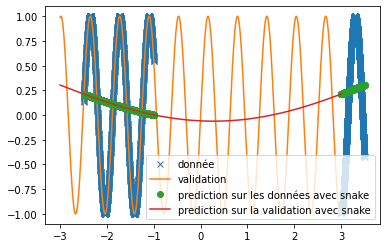

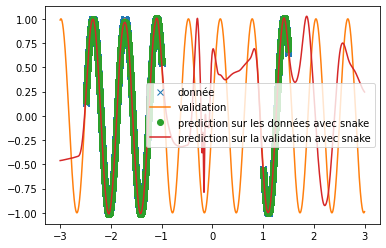

code/fonctions_activations_classiques/prediction_sinus_snake.png


BIN

code/fonctions_activations_classiques/prediction_sinus_swish.png


BIN

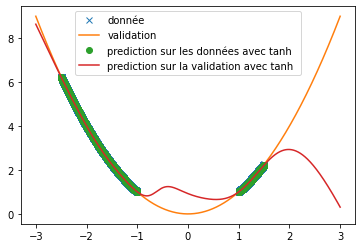

code/fonctions_activations_classiques/prediction_sinus_tanh.png


BIN

code/fonctions_activations_classiques/prediction_sinus_x+sin.png


BIN

code/fonctions_activations_classiques/prediction_snake_8neuronne.png


BIN

code/fonctions_activations_classiques/prediction_x2_ReLU.png


BIN

code/fonctions_activations_classiques/prediction_x2_sin.png


BIN

code/fonctions_activations_classiques/prediction_x2_snake_v2.png


BIN

code/fonctions_activations_classiques/prediction_x2_swish.png


BIN

code/fonctions_activations_classiques/prediction_x2_tanh.png


BIN

code/fonctions_activations_classiques/prediction_x2_x+sin.png


+ 16

- 21

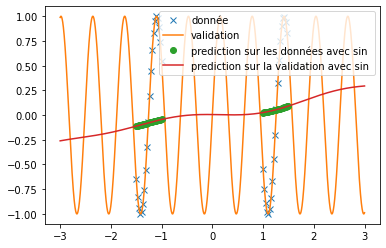

code/fonctions_activations_classiques/sin.py


| from Creation_donnee import * | from Creation_donnee import * | ||||

| import numpy as np | import numpy as np | ||||

| n=20 | |||||

| # build the dataset | |||||

| X,Y=creation_sin(-15,-8,n,1,) | |||||

| X2,Y2=creation_sin(10,18,n,1,) | |||||

| w=10 | |||||

| n=2000 | |||||

| # build the dataset | |||||

| X,Y=creation_sin(-2.5,-1,n,w) | |||||

| X2,Y2=creation_sin(1,1.5,n,w) | |||||

| X=np.concatenate([X,X2]) | X=np.concatenate([X,X2]) | ||||

| Y=np.concatenate([Y,Y2]) | Y=np.concatenate([Y,Y2]) | ||||

| n=10000 | n=10000 | ||||

| Xv,Yv=creation_sin(-20,20,n,1) | |||||

| Xv,Yv=creation_sin(-3,3,n,w) | |||||

| model_sin.add(tf.keras.Input(shape=(1,))) | model_sin.add(tf.keras.Input(shape=(1,))) | ||||

| model_sin.add(tf.keras.layers.Dense(4, activation=sin)) | |||||

| model_sin.add(tf.keras.layers.Dense(4, activation=sin)) | |||||

| model_sin.add(tf.keras.layers.Dense(4, activation=sin)) | |||||

| model_sin.add(tf.keras.layers.Dense(4, activation=sin)) | |||||

| model_sin.add(tf.keras.layers.Dense(4, activation=sin)) | |||||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||||

| model_sin.add(tf.keras.layers.Dense(512, activation=sin)) | |||||

| model_sin.add(tf.keras.layers.Dense(1)) | model_sin.add(tf.keras.layers.Dense(1)) | ||||

| opti=tf.keras.optimizers.Adam() | opti=tf.keras.optimizers.Adam() | ||||

| model_sin.summary() | model_sin.summary() | ||||

| model_sin.fit(X, Y, batch_size=1, epochs=10, shuffle='True',validation_data=(Xv, Yv)) | |||||

| model_sin.fit(X, Y, batch_size=16, epochs=150, shuffle='True',validation_data=(Xv, Yv)) | |||||

| plt.figure() | plt.figure() | ||||

| plt.plot(X,Y,'x',label='donnée') | plt.plot(X,Y,'x',label='donnée') | ||||

| plt.plot(Xv,Yv,label="validation") | plt.plot(Xv,Yv,label="validation") | ||||

| plt.plot(X,Y_predis_sin,'o',label='prediction sur les donné avec sin comme activation') | |||||

| plt.plot(Xv,Y_predis_validation_sin,label='prediction sur la validation avec sin comme activation') | |||||

| plt.plot(X,Y_predis_sin,'o',label='prediction sur les données avec sin ') | |||||

| plt.plot(Xv,Y_predis_validation_sin,label='prediction sur la validation avec sin') | |||||

| plt.legend() | plt.legend() | ||||

| plt.show() | plt.show() | ||||

| """ | |||||

| Created on Wed Nov 24 16:53:37 2021 | |||||

| @author: virgi | |||||

| """ | |||||

BIN

code/fonctions_activations_classiques/sinus_quasi_fonctionnelle.png


+ 73

- 0

code/fonctions_activations_classiques/snake.py


| # -*- coding: utf-8 -*- | |||||

| """ | |||||

| Created on Wed Nov 24 16:58:44 2021 | |||||

| @author: virgi | |||||

| """ | |||||

| import tensorflow as tf | |||||

| import matplotlib.pyplot as plt | |||||

| from fonction_activation import * | |||||

| from Creation_donnee import * | |||||

| import numpy as np | |||||

| w=10 | |||||

| n=2000 | |||||

| # build the dataset | |||||

| X,Y=creation_sin(-2.5,-1,n,w) | |||||

| X2,Y2=creation_sin(3,3.5,n,w) | |||||

| X=np.concatenate([X,X2]) | |||||

| Y=np.concatenate([Y,Y2]) | |||||

| n=10000 | |||||

| Xv,Yv=creation_sin(-3,3,n,w) | |||||

| model_sin=tf.keras.models.Sequential() | |||||

| model_sin.add(tf.keras.Input(shape=(1,))) | |||||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||||

| # model_sin.add(tf.keras.layers.Dense(512, activation=sin)) | |||||

| # model_sin.add(tf.keras.layers.Dense(64, activation=sin)) | |||||

| model_sin.add(tf.keras.layers.Dense(512, activation=snake)) | |||||

| model_sin.add(tf.keras.layers.Dense(1)) | |||||

| opti=tf.keras.optimizers.Adam() | |||||

| model_sin.compile(opti, loss='mse', metrics=['accuracy']) | |||||

| model_sin.summary() | |||||

| model_sin.fit(X, Y, batch_size=16, epochs=100, shuffle='True',validation_data=(Xv, Yv)) | |||||

| Y_predis_sin=model_sin.predict(X) | |||||

| Y_predis_validation_sin=model_sin.predict(Xv) | |||||

| plt.figure() | |||||

| plt.plot(X,Y,'x',label='donnée') | |||||

| plt.plot(Xv,Yv,label="validation") | |||||

| plt.plot(X,Y_predis_sin,'o',label='prediction sur les données avec snake') | |||||

| plt.plot(Xv,Y_predis_validation_sin,label='prediction sur la validation avec snake') | |||||

| plt.legend() | |||||

| plt.show() | |||||
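
`snake.py` trains on two disjoint intervals and validates on a wider range, so the validation set mixes points inside the training intervals with points in the gap between them. The `accuracy` metric passed to `compile` is not informative for a regression with `mse` loss, so a split error may be more useful. The following is only a sketch: the helper `split_mse` is hypothetical, the target assumes `creation_sin` computes `sin(w*t)`, and the prediction is a zero placeholder rather than real model output.

```python
import numpy as np


def split_mse(x, y_true, y_pred, intervals):
    """Return (mse_inside, mse_outside) relative to the training intervals."""
    x = np.asarray(x, dtype=float).ravel()
    # ravel() also flattens the (n, 1) arrays returned by Keras predict().
    err = (np.asarray(y_true, dtype=float).ravel()
           - np.asarray(y_pred, dtype=float).ravel()) ** 2
    inside = np.zeros(x.shape, dtype=bool)
    for lo, hi in intervals:
        inside |= (x >= lo) & (x <= hi)
    mse_in = err[inside].mean() if inside.any() else float("nan")
    mse_out = err[~inside].mean() if (~inside).any() else float("nan")
    return mse_in, mse_out


# Training intervals and validation range as used in snake.py.
xv = np.linspace(-3, 3, 10000)
yv = np.sin(10 * xv)            # assumption: creation_sin returns sin(w*t)
y_stub = np.zeros_like(xv)      # placeholder for model_sin.predict(xv)
mse_in, mse_out = split_mse(xv, yv, y_stub, [(-2.5, -1), (3, 3.5)])
```

Replacing `y_stub` with `Y_predis_validation_sin` would give one error for the region the network has seen and one for the region where it has to extrapolate.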

BIN

code/fonctions_activations_classiques/snake_donnée_augenté.png


BIN

code/fonctions_activations_classiques/snake_quasi_fonctionnelle.png


+ 11

- 18

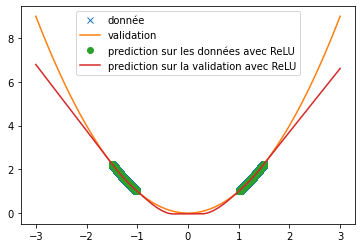

code/fonctions_activations_classiques/snake_vs_ReLU.py


| from Creation_donnee import * | from Creation_donnee import * | ||||

| import numpy as np | import numpy as np | ||||

| w=10 | |||||

| n=20 | n=20 | ||||

| # build the dataset | # build the dataset | ||||

| X,Y=creation_sin(-15,-8,n,1,) | |||||

| X2,Y2=creation_sin(10,18,n,1,) | |||||

| X,Y=creation_sin(-1.5,-1,n,w) | |||||

| X2,Y2=creation_sin(1,1.5,n,w) | |||||

| X=np.concatenate([X,X2]) | X=np.concatenate([X,X2]) | ||||

| Y=np.concatenate([Y,Y2]) | Y=np.concatenate([Y,Y2]) | ||||

| n=10000 | n=10000 | ||||

| Xv,Yv=creation_sin(-20,20,n,1) | |||||

| Xv,Yv=creation_sin(-3,3,n,w) | |||||

| model_ReLU=tf.keras.models.Sequential() | model_ReLU=tf.keras.models.Sequential() | ||||

| model_ReLU.add(tf.keras.Input(shape=(1,))) | model_ReLU.add(tf.keras.Input(shape=(1,))) | ||||

| model_ReLU.add(tf.keras.layers.Dense(64, activation='relu')) | |||||

| model_ReLU.add(tf.keras.layers.Dense(64, activation='relu')) | |||||

| model_ReLU.add(tf.keras.layers.Dense(64, activation='relu')) | |||||

| model_ReLU.add(tf.keras.layers.Dense(64, activation='relu')) | |||||

| model_ReLU.add(tf.keras.layers.Dense(64, activation='relu')) | |||||

| model_ReLU.add(tf.keras.layers.Dense(512, activation='relu')) | |||||

| model_ReLU.add(tf.keras.layers.Dense(1)) | model_ReLU.add(tf.keras.layers.Dense(1)) | ||||

| model_ReLU.fit(X, Y, batch_size=1, epochs=10, shuffle='True',validation_data=(Xv, Yv)) | |||||

| model_ReLU.fit(X, Y, batch_size=16, epochs=10, shuffle='True',validation_data=(Xv, Yv)) | |||||

| model_snake.add(tf.keras.Input(shape=(1,))) | model_snake.add(tf.keras.Input(shape=(1,))) | ||||

| model_snake.add(tf.keras.layers.Dense(64, activation=snake)) | |||||

| model_snake.add(tf.keras.layers.Dense(64, activation=snake)) | |||||

| model_snake.add(tf.keras.layers.Dense(64, activation=snake)) | |||||

| model_snake.add(tf.keras.layers.Dense(64, activation=snake)) | |||||

| model_snake.add(tf.keras.layers.Dense(64, activation=snake)) | |||||

| model_snake.add(tf.keras.layers.Dense(512, activation=snake)) | |||||

| model_snake.add(tf.keras.layers.Dense(1)) | model_snake.add(tf.keras.layers.Dense(1)) | ||||

| model_snake.summary() | model_snake.summary() | ||||

| model_snake.fit(X, Y, batch_size=1, epochs=10, shuffle='True',validation_data=(Xv, Yv)) | |||||

| model_snake.fit(X, Y, batch_size=16, epochs=100, shuffle='True',validation_data=(Xv, Yv)) | |||||

| plt.figure() | plt.figure() | ||||

| plt.plot(X,Y,'x',label='donnée') | plt.plot(X,Y,'x',label='donnée') | ||||

| plt.plot(Xv,Yv,label="validation") | plt.plot(Xv,Yv,label="validation") | ||||

| plt.plot(X,Y_predis_snake,'o',label='prediction sur les donné avec snake comme activation') | |||||

| plt.plot(Xv,Y_predis_validation_snake,label='prediction sur la validation avec snake comme activation') | |||||

| plt.plot(X,Y_predis_snake,'o',label='prediction sur les données avec snake') | |||||

| plt.plot(Xv,Y_predis_validation_snake,label='prediction sur la validation avec snake') | |||||

| plt.legend() | plt.legend() | ||||

| plt.show() | plt.show() | ||||

+ 10

- 13

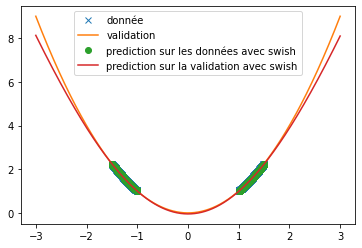

code/fonctions_activations_classiques/swish.py


| from Creation_donnee import * | from Creation_donnee import * | ||||

| import numpy as np | import numpy as np | ||||

| n=20 | |||||

| w=10 | |||||

| n=20000 | |||||

| # build the dataset | # build the dataset | ||||

| X,Y=creation_sin(-15,-8,n,1,) | |||||

| X2,Y2=creation_sin(10,18,n,1,) | |||||

| X,Y=creation_x2(-2.5,-1,n) | |||||

| X2,Y2=creation_x2(1,1.5,n) | |||||

| X=np.concatenate([X,X2]) | X=np.concatenate([X,X2]) | ||||

| Y=np.concatenate([Y,Y2]) | Y=np.concatenate([Y,Y2]) | ||||

| n=10000 | n=10000 | ||||

| Xv,Yv=creation_sin(-20,20,n,1) | |||||

| Xv,Yv=creation_x2(-3,3,n) | |||||

| model_swish.add(tf.keras.Input(shape=(1,))) | model_swish.add(tf.keras.Input(shape=(1,))) | ||||

| model_swish.add(tf.keras.layers.Dense(64, activation='swish')) | |||||

| model_swish.add(tf.keras.layers.Dense(64, activation='swish')) | |||||

| model_swish.add(tf.keras.layers.Dense(64, activation='swish')) | |||||

| model_swish.add(tf.keras.layers.Dense(64, activation='swish')) | |||||

| model_swish.add(tf.keras.layers.Dense(64, activation='swish')) | |||||

| model_swish.add(tf.keras.layers.Dense(512, activation='swish')) | |||||

| model_swish.add(tf.keras.layers.Dense(1)) | model_swish.add(tf.keras.layers.Dense(1)) | ||||

| model_swish.summary() | model_swish.summary() | ||||

| model_swish.fit(X, Y, batch_size=1, epochs=10, shuffle='True',validation_data=(Xv, Yv)) | |||||

| model_swish.fit(X, Y, batch_size=16, epochs=50, shuffle='True',validation_data=(Xv, Yv)) | |||||

| plt.figure() | plt.figure() | ||||

| plt.plot(X,Y,'x',label='donnée') | plt.plot(X,Y,'x',label='donnée') | ||||

| plt.plot(Xv,Yv,label="validation") | plt.plot(Xv,Yv,label="validation") | ||||

| plt.plot(X,Y_predis_swish,'o',label='prediction sur les donné avec swish comme activation') | |||||

| plt.plot(Xv,Y_predis_validation_swish,label='prediction sur la validation avec swish comme activation') | |||||

| plt.plot(X,Y_predis_swish,'o',label='prediction sur les données avec swish') | |||||

| plt.plot(Xv,Y_predis_validation_swish,label='prediction sur la validation avec swish') | |||||

| plt.legend() | plt.legend() | ||||

| plt.show() | plt.show() | ||||

BIN

code/fonctions_activations_classiques/tanh.png


+ 16

- 21

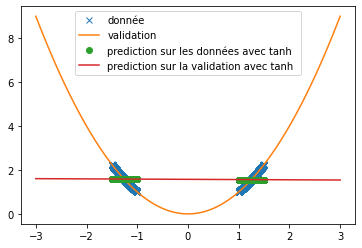

code/fonctions_activations_classiques/tanh_vs_ReLU.py


| from Creation_donnee import * | from Creation_donnee import * | ||||

| import numpy as np | import numpy as np | ||||

| n=20 | |||||

| w=10 | |||||

| n=20000 | |||||

| # build the dataset | # build the dataset | ||||

| X,Y=creation_sin(-15,-8,n,1,) | |||||

| X2,Y2=creation_sin(10,18,n,1,) | |||||

| X,Y=creation_x2(-2.5,-1,n) | |||||

| X2,Y2=creation_x2(1,1.5,n) | |||||

| X=np.concatenate([X,X2]) | X=np.concatenate([X,X2]) | ||||

| Y=np.concatenate([Y,Y2]) | Y=np.concatenate([Y,Y2]) | ||||

| n=10000 | n=10000 | ||||

| Xv,Yv=creation_sin(-20,20,n,1) | |||||

| Xv,Yv=creation_x2(-3,3,n) | |||||

| model_ReLU=tf.keras.models.Sequential() | model_ReLU=tf.keras.models.Sequential() | ||||

| model_ReLU.add(tf.keras.Input(shape=(1,))) | model_ReLU.add(tf.keras.Input(shape=(1,))) | ||||

| model_ReLU.add(tf.keras.layers.Dense(64, activation='relu')) | |||||

| model_ReLU.add(tf.keras.layers.Dense(64, activation='relu')) | |||||

| model_ReLU.add(tf.keras.layers.Dense(64, activation='relu')) | |||||

| model_ReLU.add(tf.keras.layers.Dense(64, activation='relu')) | |||||

| model_ReLU.add(tf.keras.layers.Dense(64, activation='relu')) | |||||

| model_ReLU.add(tf.keras.layers.Dense(512, activation='relu')) | |||||

| model_ReLU.add(tf.keras.layers.Dense(1)) | model_ReLU.add(tf.keras.layers.Dense(1)) | ||||

| model_ReLU.fit(X, Y, batch_size=1, epochs=10, shuffle='True',validation_data=(Xv, Yv)) | |||||

| model_ReLU.fit(X, Y, batch_size=16, epochs=5, shuffle='True',validation_data=(Xv, Yv)) | |||||

| model_tanh.add(tf.keras.Input(shape=(1,))) | model_tanh.add(tf.keras.Input(shape=(1,))) | ||||

| model_tanh.add(tf.keras.layers.Dense(64, activation='tanh')) | |||||

| model_tanh.add(tf.keras.layers.Dense(64, activation='tanh')) | |||||

| model_tanh.add(tf.keras.layers.Dense(64, activation='tanh')) | |||||

| model_tanh.add(tf.keras.layers.Dense(64, activation='tanh')) | |||||

| model_tanh.add(tf.keras.layers.Dense(64, activation='tanh')) | |||||

| model_tanh.add(tf.keras.layers.Dense(512, activation='tanh')) | |||||

| model_tanh.add(tf.keras.layers.Dense(1)) | model_tanh.add(tf.keras.layers.Dense(1)) | ||||

| model_tanh.summary() | model_tanh.summary() | ||||

| model_tanh.fit(X, Y, batch_size=1, epochs=10, shuffle='True',validation_data=(Xv, Yv)) | |||||

| model_tanh.fit(X, Y, batch_size=16, epochs=50, shuffle='True',validation_data=(Xv, Yv)) | |||||

| plt.figure() | plt.figure() | ||||

| plt.plot(X,Y,'x',label='donnée') | plt.plot(X,Y,'x',label='donnée') | ||||

| plt.plot(Xv,Yv,label="validation") | plt.plot(Xv,Yv,label="validation") | ||||

| plt.plot(X,Y_predis_ReLU,'o',label='prediction sur les donné') | |||||

| plt.plot(Xv,Y_predis_validation_ReLU,label='prediction sur la validation') | |||||

| plt.plot(X,Y_predis_ReLU,'o',label='prediction sur les données avec ReLU') | |||||

| plt.plot(Xv,Y_predis_validation_ReLU,label='prediction sur la validation avec ReLU') | |||||

| plt.legend() | plt.legend() | ||||

| plt.show() | plt.show() | ||||

| plt.figure() | plt.figure() | ||||

| plt.plot(X,Y,'x',label='donnée') | plt.plot(X,Y,'x',label='donnée') | ||||

| plt.plot(Xv,Yv,label="validation") | plt.plot(Xv,Yv,label="validation") | ||||

| plt.plot(X,Y_predis_tanh,'o',label='prediction sur les donné avec tanh comme activation') | |||||

| plt.plot(Xv,Y_predis_validation_tanh,label='prediction sur la validation avec tanh comme activation') | |||||

| plt.plot(X,Y_predis_tanh,'o',label='prediction sur les données avec tanh ') | |||||

| plt.plot(Xv,Y_predis_validation_tanh,label='prediction sur la validation avec tanh ') | |||||

| plt.legend() | plt.legend() | ||||

| plt.show() | plt.show() | ||||

+ 11

- 12

code/fonctions_activations_classiques/x_sin.py


| from Creation_donnee import * | from Creation_donnee import * | ||||

| import numpy as np | import numpy as np | ||||

| n=20 | |||||

| w=10 | |||||

| n=20000 | |||||

| # build the dataset | # build the dataset | ||||

| X,Y=creation_sin(-15,-8,n,1,) | |||||

| X2,Y2=creation_sin(10,18,n,1,) | |||||

| X,Y=creation_x2(-2.5,-1,n) | |||||

| X2,Y2=creation_x2(1,1.5,n) | |||||

| X=np.concatenate([X,X2]) | X=np.concatenate([X,X2]) | ||||

| Y=np.concatenate([Y,Y2]) | Y=np.concatenate([Y,Y2]) | ||||

| n=10000 | n=10000 | ||||

| Xv,Yv=creation_sin(-20,20,n,1) | |||||

| Xv,Yv=creation_x2(-3,3,n) | |||||

| model_xsin.add(tf.keras.Input(shape=(1,))) | model_xsin.add(tf.keras.Input(shape=(1,))) | ||||

| model_xsin.add(tf.keras.layers.Dense(64, activation=x_sin)) | |||||

| model_xsin.add(tf.keras.layers.Dense(64, activation=x_sin)) | |||||

| model_xsin.add(tf.keras.layers.Dense(64, activation=x_sin)) | |||||

| model_xsin.add(tf.keras.layers.Dense(64, activation=x_sin)) | |||||

| model_xsin.add(tf.keras.layers.Dense(64, activation=x_sin)) | |||||

| model_xsin.add(tf.keras.layers.Dense(512, activation=x_sin)) | |||||

| model_xsin.add(tf.keras.layers.Dense(1)) | model_xsin.add(tf.keras.layers.Dense(1)) | ||||

| model_xsin.summary() | model_xsin.summary() | ||||

| model_xsin.fit(X, Y, batch_size=1, epochs=10, shuffle='True',validation_data=(Xv, Yv)) | |||||

| model_xsin.fit(X, Y, batch_size=16, epochs=100, shuffle='True',validation_data=(Xv, Yv)) | |||||

| plt.figure() | plt.figure() | ||||

| plt.plot(X,Y,'x',label='donnée') | plt.plot(X,Y,'x',label='donnée') | ||||

| plt.plot(Xv,Yv,label="validation") | plt.plot(Xv,Yv,label="validation") | ||||

| plt.plot(X,Y_predis_xsin,'o',label='prediction sur les donné avec x+sin comme activation') | |||||

| plt.plot(Xv,Y_predis_validation_xsin,label='prediction sur la validation avec x+sin comme activation') | |||||

| plt.plot(X,Y_predis_xsin,'o',label='prediction sur les données avec x+sin ') | |||||

| plt.plot(Xv,Y_predis_validation_xsin,label='prediction sur la validation avec x+sin') | |||||

| plt.legend() | plt.legend() | ||||

| plt.show() | plt.show() | ||||
